Learning Dynamic Siamese Network for Visual Object Tracking

Qing Guo¹, Wei Feng¹*, Ce Zhou¹, Rui Huang¹, Liang Wan², Song Wang³

¹School of Computer Science and Technology, Tianjin University
²School of Computer Software, Tianjin University
³University of South Carolina, Columbia, SC 29208, USA

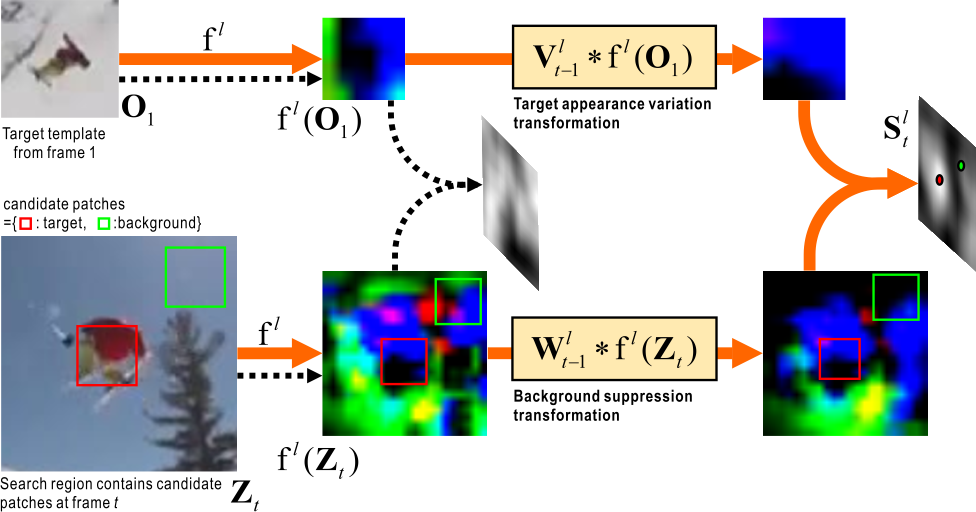

Figure 1. Basic pipelines of our DSiam network (orange line) and SiamFC (black dashed line). $\mathrm{f}^{l}(\cdot)$ denotes a CNN that extracts deep features at the $l$-th layer. We add a target appearance variation transformation ($\mathbf{V}_{t-1}^{l}$) and a background suppression transformation ($\mathbf{W}_{t-1}^{l}$) to the two branches, respectively. Both transformations are rapidly learned from frame $t-1$. When the target at frame $t$ (red box) looks entirely different from the first-frame target $\mathbf{O}_1$, SiamFC produces a meaningless response map in which the target cannot be found. In contrast, our method captures the target successfully.
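The two pipelines in Figure 1 can be contrasted with a minimal single-channel sketch. It assumes that both transformations act as circular convolutions computed via the FFT and that matching is a plain sliding-window cross-correlation of template features over search-region features. `response_map`, `circ_conv2d`, `cross_correlate_valid` and `f_Zt` (the search-region features at frame $t$) are hypothetical names, and real feature maps are multi-channel. With $\mathbf{V}$ and $\mathbf{W}$ set to a unit impulse (the identity transformation), the DSiam branch reduces to SiamFC.

```python
import numpy as np

def circ_conv2d(kernel, x):
    """Circular 2-D convolution of two equal-size maps via the FFT."""
    return np.real(np.fft.ifft2(np.fft.fft2(kernel) * np.fft.fft2(x)))

def cross_correlate_valid(template, search):
    """Slide `template` over `search` and sum elementwise products
    at every fully overlapping ('valid') position."""
    th, tw = template.shape
    sh, sw = search.shape
    out = np.empty((sh - th + 1, sw - tw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(template * search[i:i + th, j:j + tw])
    return out

def response_map(f_O1, f_Zt, V=None, W=None):
    """SiamFC: corr(f(O_1), f(Z_t)).
    DSiam sketch (assumed form): corr(V * f(O_1), W * f(Z_t)),
    where * is circular convolution."""
    template = f_O1 if V is None else circ_conv2d(V, f_O1)
    search = f_Zt if W is None else circ_conv2d(W, f_Zt)
    return cross_correlate_valid(template, search)

# Usage with random stand-ins for single-channel feature maps.
rng = np.random.default_rng(1)
f_O1 = rng.normal(size=(4, 4))    # template-branch features
f_Zt = rng.normal(size=(12, 12))  # search-branch features at frame t

# Unit impulses act as identity transformations.
V = np.zeros((4, 4))
V[0, 0] = 1.0
W = np.zeros((12, 12))
W[0, 0] = 1.0

siamfc_map = response_map(f_O1, f_Zt)
dsiam_map = response_map(f_O1, f_Zt, V, W)
peak = np.unravel_index(np.argmax(dsiam_map), dsiam_map.shape)
```

Learned, non-identity $\mathbf{V}$ and $\mathbf{W}$ reshape the template and the search features before matching, which is what lets the response map stay informative when the target's appearance drifts away from $\mathbf{O}_1$.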

Abstract

How to effectively learn the temporal variation of target appearance and exclude the interference of cluttered background, while maintaining real-time response, is an essential problem in visual object tracking. Recently, Siamese networks have shown the great potential of matching-based trackers for achieving balanced accuracy and beyond-real-time speed. However, they still lag well behind classification- and updating-based trackers in tolerating temporal changes of objects and imaging conditions. In this paper, we propose a dynamic Siamese network with a fast transformation learning model that enables effective online learning of target appearance variation and background suppression from previous frames. We then present elementwise multi-layer fusion to adaptively integrate the network outputs using multi-level deep features. Unlike state-of-the-art trackers, our approach allows the use of any feasible generically or specifically trained features, such as SiamFC and VGG. More importantly, the proposed dynamic Siamese network can be jointly trained as a whole directly on labeled video sequences, and thus can take full advantage of the rich spatial-temporal information of moving objects. As a result, our approach achieves state-of-the-art performance on the OTB-2013 and VOT-2015 benchmarks, while exhibiting a superior balance of accuracy and real-time response compared with state-of-the-art competitors.
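Elementwise multi-layer fusion can be read as a per-position weighted sum of per-layer response maps, $\mathbf{S} = \sum_{l} \gamma^{l} \odot \mathbf{S}^{l}$. The sketch below assumes this form, with one weight map $\gamma^{l}$ per layer of the same size as the response maps; the weight maps are simply taken as given (how they are learned is not shown), and `fuse_responses` is a hypothetical name.

```python
import numpy as np

def fuse_responses(responses, weight_maps):
    """Elementwise fusion sketch: S = sum over layers of gamma^l * S^l,
    where * is the elementwise product.
    responses:   list of per-layer response maps S^l (equal shapes)
    weight_maps: list of per-layer weight maps gamma^l (same shapes)"""
    fused = np.zeros(np.shape(responses[0]), dtype=float)
    for s, g in zip(responses, weight_maps):
        fused += np.asarray(g, dtype=float) * np.asarray(s, dtype=float)
    return fused

# Usage: two layers; each position trusts the layer its weight map favours.
S_shallow = np.array([[1.0, 2.0], [3.0, 4.0]])
S_deep = np.array([[10.0, 20.0], [30.0, 40.0]])
gamma_shallow = np.array([[1.0, 0.0], [0.0, 1.0]])
gamma_deep = 1.0 - gamma_shallow

fused = fuse_responses([S_shallow, S_deep], [gamma_shallow, gamma_deep])
peak = np.unravel_index(np.argmax(fused), fused.shape)
```

Unlike a single scalar weight per layer, an elementwise map lets the fusion rely on different layers at different positions of the response map.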

Introduction

In this paper, we show that reliable online adaptation can be realized for matching-based tracking. Specifically, we propose a dynamic Siamese network (DSiam) with a fast, general transformation learning model that enables effective online learning of target appearance variation and background suppression from previous frames. Because the transformation learning has a closed-form solution in the FFT domain, it not only provides effective online adaptation but is also very fast, and it serves as a single network layer that can be jointly fine-tuned with the whole network. Our second contribution is elementwise multi-layer fusion, which adaptively integrates the multi-level deep features of the DSiam network. Third, unlike most matching-based trackers, whose matching models are trained on image pairs, we develop a complete joint training scheme that trains the DSiam network as a whole directly on labeled video sequences. Our model can therefore take full account of the rich spatial-temporal information of moving objects in the training videos. Extensive experiments on real-world benchmark datasets validate the balanced and superior performance of our approach.
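The FFT-domain closed form mentioned above can be illustrated with a minimal sketch. We assume single-channel feature maps of equal size, circular convolution $\ast$, and a ridge-regression objective $\min_{\mathbf{V}} \|\mathbf{V} \ast \mathbf{X} - \mathbf{Y}\|^2 + \lambda \|\mathbf{V}\|^2$, with $\mathbf{X} = \mathrm{f}^{l}(\mathbf{O}_1)$ and $\mathbf{Y} = \mathrm{f}^{l}(\mathbf{O}_{t-1})$; these choices, and the names `learn_transform`, `apply_transform` and `lam`, are our assumptions for illustration. Because convolution becomes a product in the Fourier domain, the problem decouples per frequency and the minimizer is $\hat{\mathbf{V}} = \overline{\hat{\mathbf{X}}} \odot \hat{\mathbf{Y}} / (\overline{\hat{\mathbf{X}}} \odot \hat{\mathbf{X}} + \lambda)$.

```python
import numpy as np

def learn_transform(x, y, lam=1e-2):
    """Closed-form ridge regression for a circular-convolution filter V
    such that V * x ~= y (sketch; single-channel, equal-size maps)."""
    X = np.fft.fft2(x)
    Y = np.fft.fft2(y)
    # Per-frequency solution: conj(X) Y / (conj(X) X + lam)
    V_hat = np.conj(X) * Y / (np.conj(X) * X + lam)
    # x and y are real, so V_hat is conjugate-symmetric and V is real.
    return np.real(np.fft.ifft2(V_hat))

def apply_transform(v, x):
    """Circular convolution v * x computed in the FFT domain."""
    return np.real(np.fft.ifft2(np.fft.fft2(v) * np.fft.fft2(x)))

# Usage: stand-ins for f^l(O_1) and f^l(O_{t-1}), the latter a shifted copy.
rng = np.random.default_rng(0)
f_O1 = rng.normal(size=(8, 8))
f_Oprev = np.roll(f_O1, shift=(1, 2), axis=(0, 1))

V = learn_transform(f_O1, f_Oprev, lam=1e-6)
reconstructed = apply_transform(V, f_O1)
peak = np.unravel_index(np.argmax(V), V.shape)  # the learned shift
```

The solve costs a few FFTs and elementwise operations, which is why such a transformation can run online every frame and be treated as a differentiable layer. The same solver could be reused for $\mathbf{W}$ with a different input and target pair; which pair the paper uses is not stated on this page.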

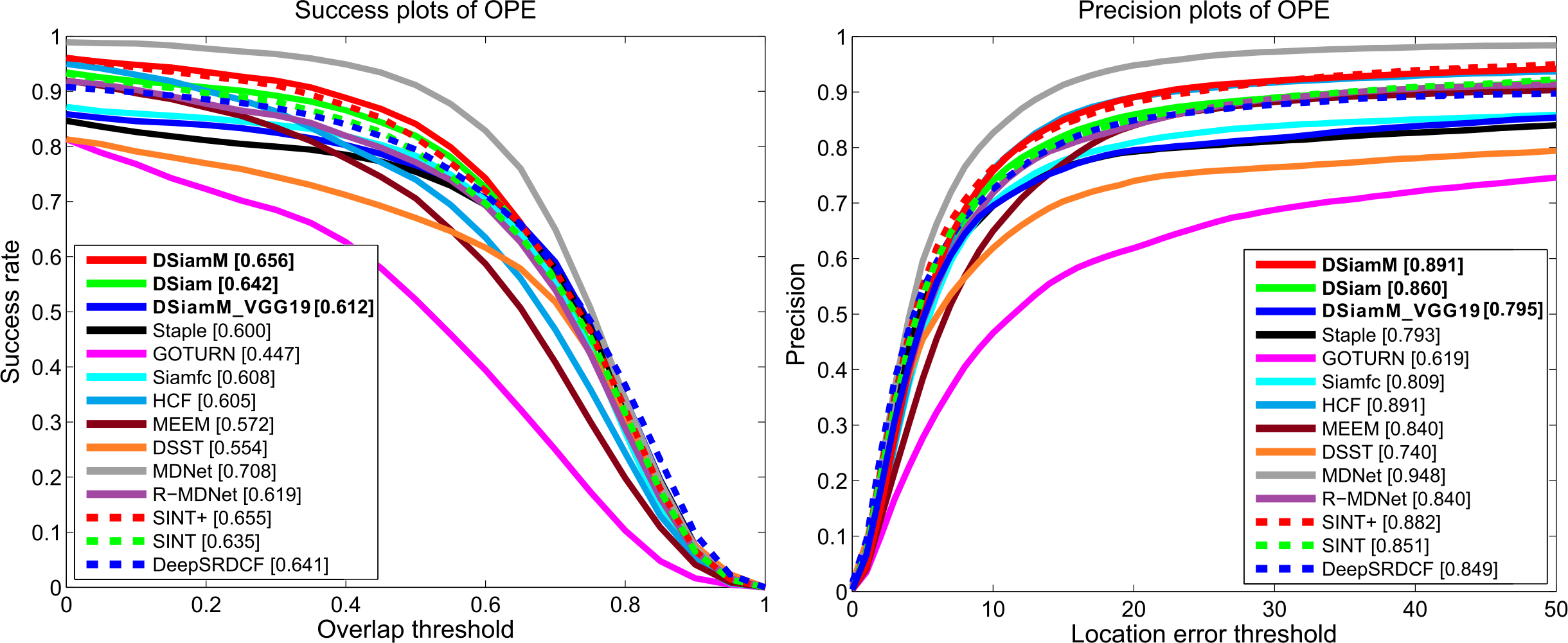

Results on OTB-2013

Success and precision plots of OPE (one-pass evaluation) on OTB-2013. The numbers in the legend indicate the area-under-curve (AUC) scores for the success plots and the precision at a 20-pixel threshold for the precision plots, respectively.
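The two legend numbers can be computed from per-frame bounding boxes. This sketch follows the OTB conventions as we understand them: precision is the fraction of frames whose center location error is at most 20 pixels, and the success AUC is the mean success rate over overlap (IoU) thresholds from 0 to 1 in steps of 0.05. Boxes are assumed to be `(x, y, w, h)` rows, the official toolkit's one-pixel offset conventions are ignored, and all function names are hypothetical.

```python
import numpy as np

def center_errors(pred, gt):
    """Euclidean distance between box centers, one value per frame."""
    pc = pred[:, :2] + pred[:, 2:] / 2.0
    gc = gt[:, :2] + gt[:, 2:] / 2.0
    return np.linalg.norm(pc - gc, axis=1)

def overlaps(pred, gt):
    """Intersection-over-union of (x, y, w, h) boxes, one value per frame."""
    x1 = np.maximum(pred[:, 0], gt[:, 0])
    y1 = np.maximum(pred[:, 1], gt[:, 1])
    x2 = np.minimum(pred[:, 0] + pred[:, 2], gt[:, 0] + gt[:, 2])
    y2 = np.minimum(pred[:, 1] + pred[:, 3], gt[:, 1] + gt[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = pred[:, 2] * pred[:, 3] + gt[:, 2] * gt[:, 3] - inter
    return inter / union

def precision_at(pred, gt, threshold=20.0):
    """Fraction of frames with center error <= threshold pixels."""
    return float(np.mean(center_errors(pred, gt) <= threshold))

def success_auc(pred, gt, thresholds=None):
    """Mean success rate (IoU > threshold) over the threshold set."""
    if thresholds is None:
        thresholds = np.linspace(0.0, 1.0, 21)
    iou = overlaps(pred, gt)
    return float(np.mean([np.mean(iou > t) for t in thresholds]))

# Usage: frame 1 is tracked exactly, frame 2 is 30 px off with no overlap.
gt = np.array([[10, 10, 20, 20], [50, 50, 20, 20]], dtype=float)
pred = np.array([[10, 10, 20, 20], [80, 50, 20, 20]], dtype=float)
prec = precision_at(pred, gt)
auc = success_auc(pred, gt)
```

Averaging over all thresholds, rather than reporting one overlap cutoff, is what makes the AUC summarize the whole success plot.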

Demo

A challenging video in which the target is surrounded by cluttered background and similar objects.

Source Code

Source code (Matlab) and models: [Code] [OTB-100 results] [Model coming soon]

Citation - BibTeX


Qing Guo, Wei Feng, Ce Zhou, Rui Huang, Liang Wan, Song Wang. Learning Dynamic Siamese Network for Visual Object Tracking. In ICCV 2017 (CCF-A).

[PDF] [Supplementary] [BibTeX]